
I'm a first-year M.S. student at Seoul National University, advised by Prof. Jonghyun Choi. My research focuses on vision-language-action (VLA) models for open-vocabulary mobile manipulation, with an emphasis on enabling robots to adapt and operate robustly in dynamic, continuously changing real-world environments. I received my B.S. in Computer Science from Yonsei University under the supervision of Prof. Jonghyun Choi. My prior research on continual learning and generative model–based adaptation provides a foundation for my current work on adaptive embodied intelligence and robotics. Recently, my primary research interest has been self-evolving robot agents: agents that autonomously improve their perception, reasoning, and action policies through interaction with the environment over long time horizons.


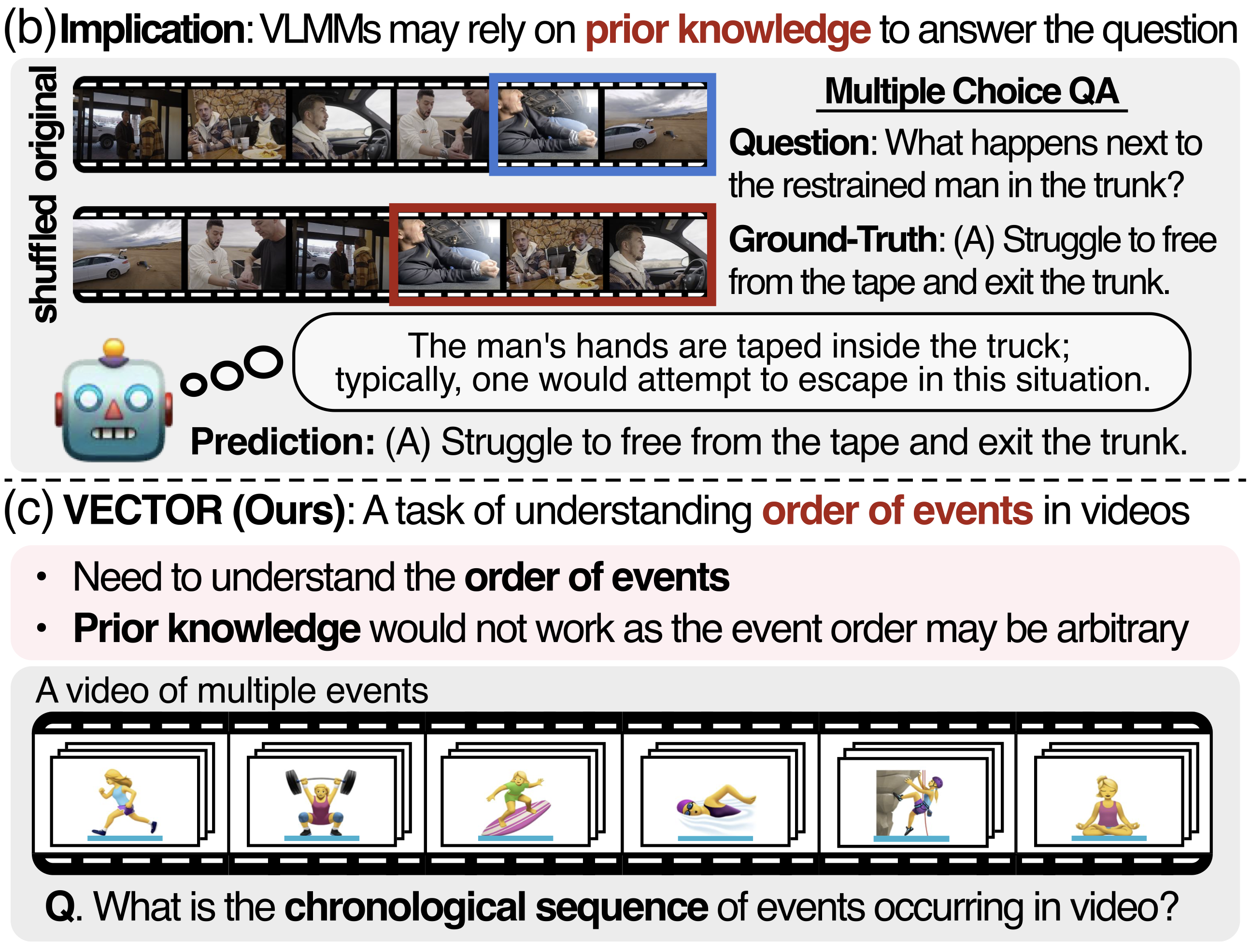

Hyeonbeom Choi*, Daechul Ahn*, Youhan Lee, Taewook Kang, Seongwon Cho, Jonghyun Choi arXiv, 2026 [paper (arXiv)] [bibtex] [project]


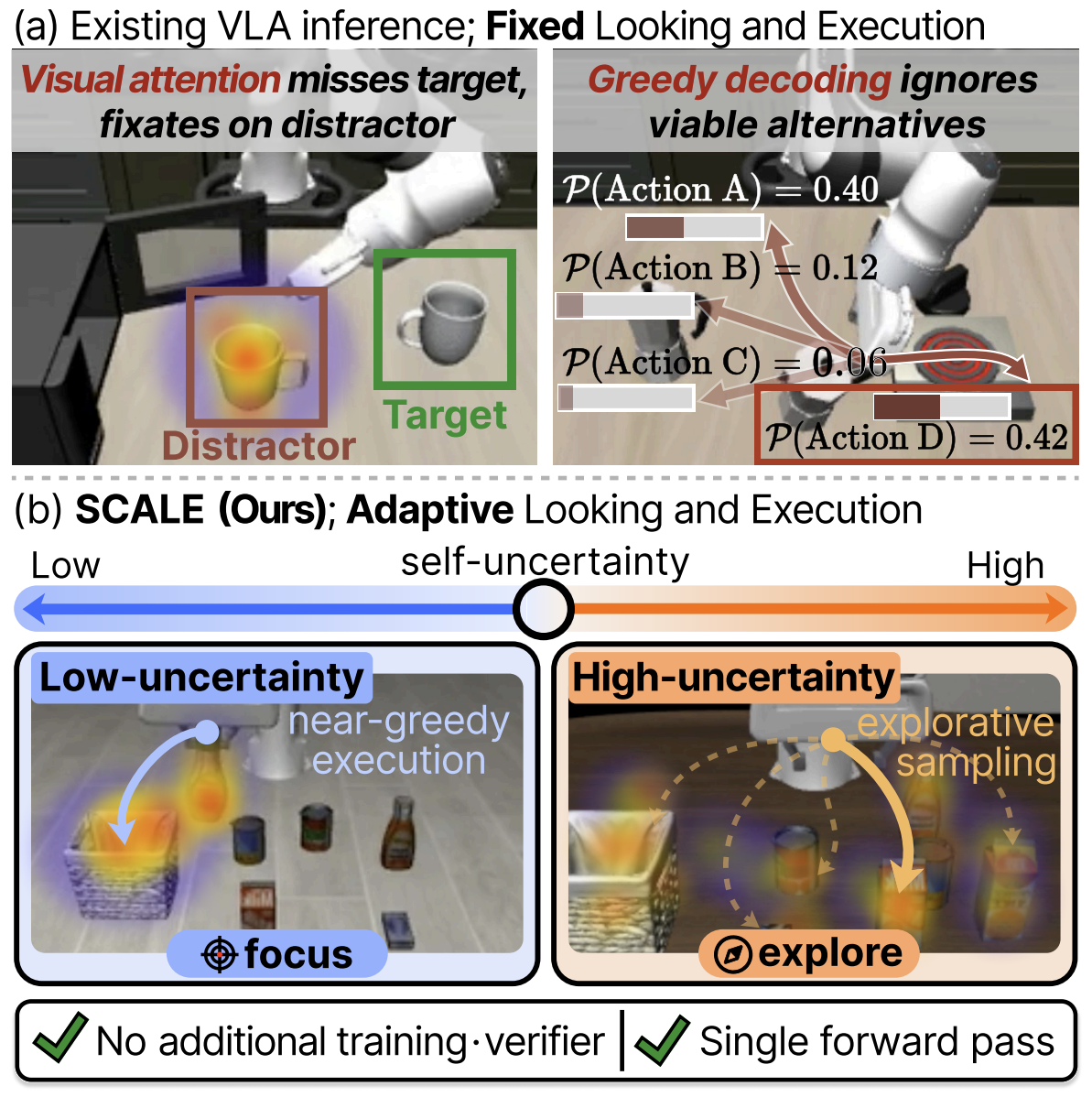

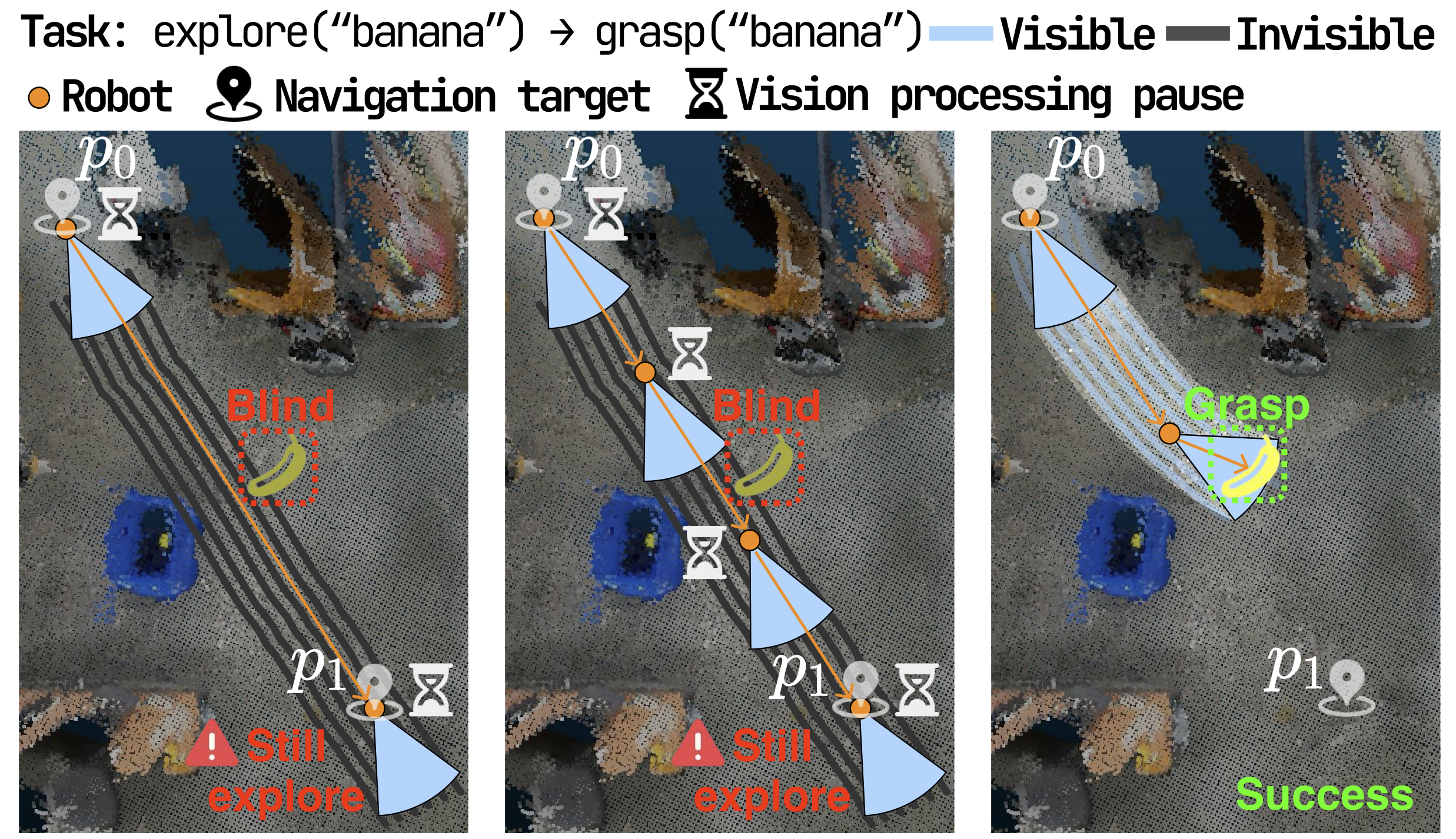

Seongwon Cho*, Daechul Ahn*, Donghyun Shin, Hyeonbeom Choi, San Kim, Jonghyun Choi ICRA 2026 (To appear) [pdf] [bibtex] [code] [project page]


Daechul Ahn*, Yura Choi*, Hyeonbeom Choi*, Seongwon Cho, San Kim, Jonghyun Choi WACV 2026 (To appear) [paper (Coming soon)]


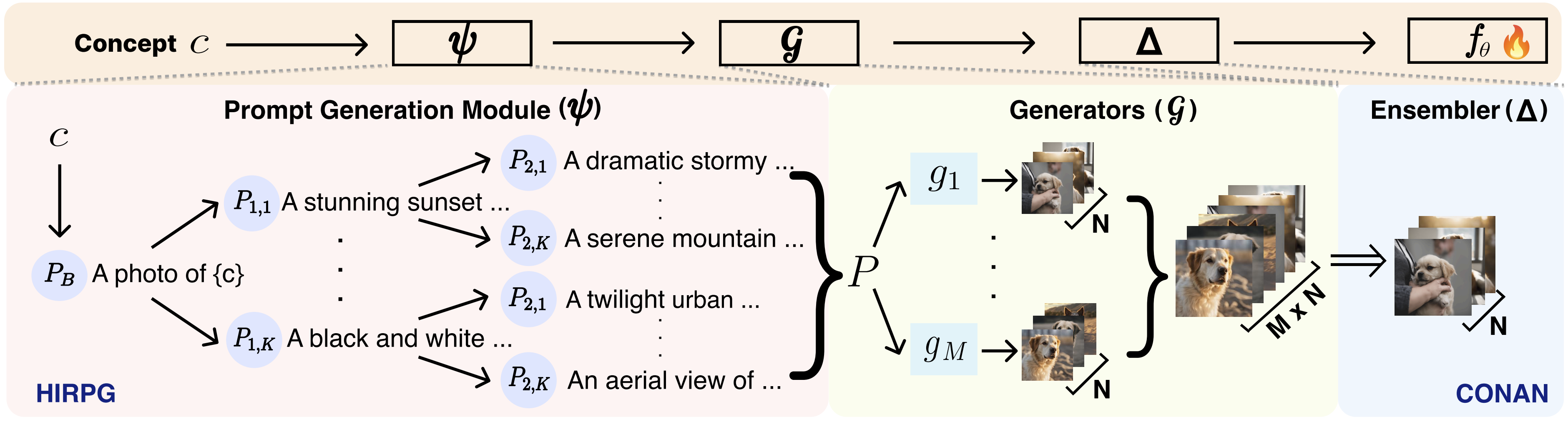

Minhyuk Seo, Seongwon Cho, Minjae Lee, Diganta Misra, Hyeonbeom Choi, Seon Joo Kim, Jonghyun Choi TMLR 2025 [paper]

- 1st Place in Continual Test-time Adaptation for Object Detection, VCL Workshop @ ICCV 2023 [technical report]


This template is from Jon Barron.